In Part I we discussed the need to spread digital

Requirements

- Google Drive data needs to be synced to other services

- Syncing is automatic and periodic

- The transfer needs to be over a secure connection, encrypted on both ends

- Transport layer needs to be open source and auditable

Contenders

Dropbox + multdrive

By far the most convenient option would be to use multdrive to connect my Google Drive to Dropbox and have it sync the data. However, this solution is not open source, and furthermore, it’s run by a company with a history of data leaks and abuse. No go.

Own server + rclone

I could deploy my own Raspberry Pi-powered server and have rclone sync the data. This is by far the most privacy-friendly solution, but I’d have to manage security myself and handle hardware redundancy and failures. That makes it very inconvenient.

Dropbox + rclone

Finally, I can use rclone to sync from Google Drive to Dropbox. This seems like the ideal solution, with one caveat: to satisfy requirement 2 (automatic, periodic syncing), rclone needs to run on a machine that is on 24/7 and is responsible for syncing the content. As it happens, I have a Linux VM hosted in Azure, which is perfect for this, but you could set this up on any server.

Implementation

rclone setup

Log into the remote machine and install a recent version of rclone:

$ curl https://rclone.org/install.sh | sudo bash

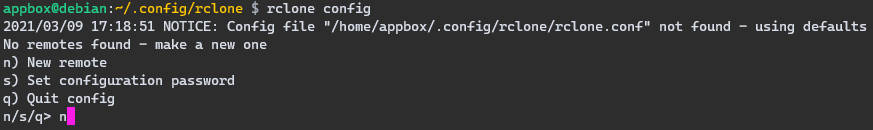

Start by configuring rclone to work with Google Drive:

$ rclone config

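Select Google Drive from the list of storage types (option 15 in the version used here; the numbering changes between rclone releases).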

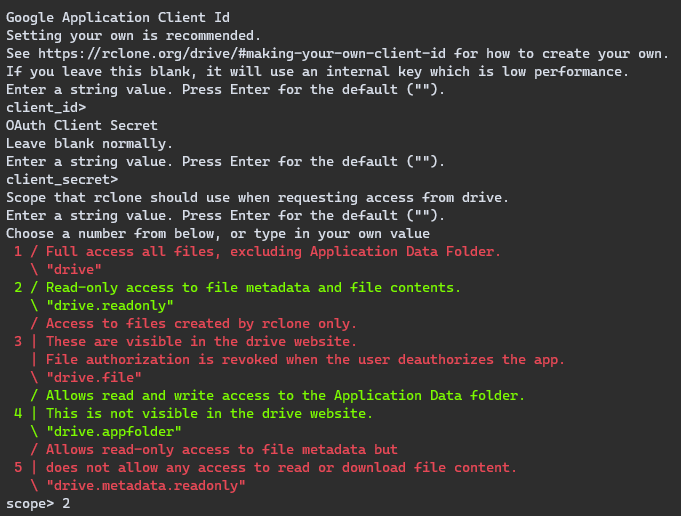

Leave client_id and client_secret blank, since we aren’t doing anything high-performance. rclone will then use its own built-in (shared) client ID and secret instead of one registered specifically for you.

On the next dialog, select option 2: Read-only access to file metadata and file contents.

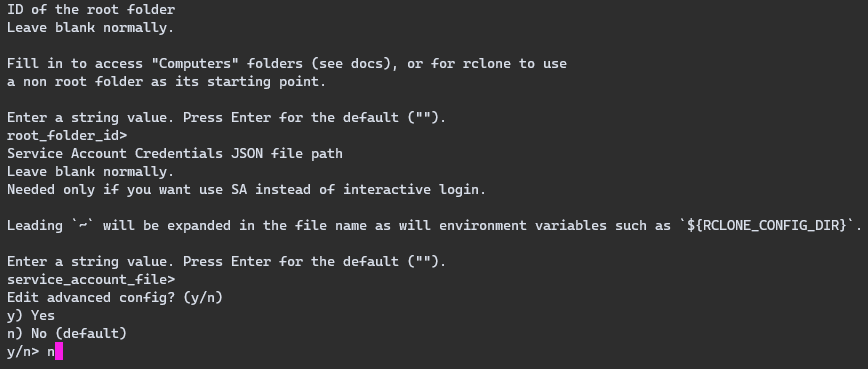

Select the default options for everything else.

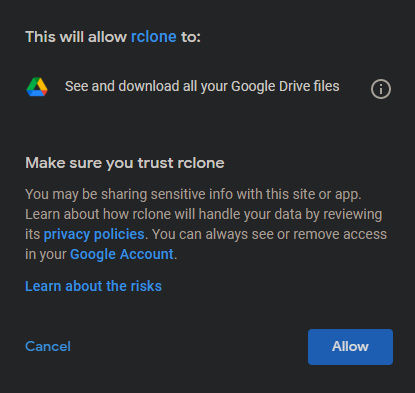

Finally, a link will pop up asking you to grant rclone access to your drive. If everything is configured correctly, it should look like this:

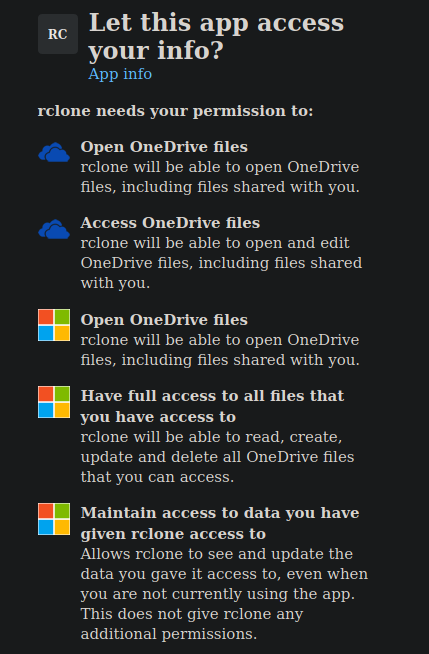

Repeat the same process for Dropbox, except this time full access to all files (Full access all files, excluding Application Data Folder) is needed. We should end up with two configured remotes: one for Google Drive and one for Dropbox.
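Before moving on, it can help to confirm that both remotes exist. The sketch below is my own addition, not part of the original setup: it parses the output of `rclone listremotes`, which prints one remote per line with a trailing colon. The helper names are hypothetical, and the remote names gdrive and onedrive are the ones used in the sync command later in this post; substitute your own.

```shell
#!/bin/bash
# Sketch: verify that the expected rclone remotes are configured.
# Assumes remote names such as gdrive/onedrive; pass whatever names you chose.

# Succeeds if remote "$1" appears in `rclone listremotes` output read from stdin.
has_remote() {
  grep -qxF -- "$1:"
}

# Prints each missing remote to stderr; returns non-zero if any are missing.
check_remotes() {
  local remotes name missing=0
  remotes="$(rclone listremotes)" || return 1
  for name in "$@"; do
    if ! printf '%s\n' "$remotes" | has_remote "$name"; then
      echo "missing remote: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_remotes gdrive onedrive
```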

Securing the access tokens

We have successfully configured rclone, but the configuration file ~/.config/rclone/rclone.conf, which holds the OAuth tokens, is stored unencrypted on disk.

Encrypting that file is a very good idea, especially if you’re running rclone on a machine that you do not fully own. If you do fully own the machine, you can skip this step entirely.

$ rclone config

Select s) Set configuration password and type in a password. Your rclone config file is now encrypted.
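To double-check that the encryption took effect, you can inspect the file itself. This is a hedged sketch: it assumes the encrypted file contains a line beginning with `RCLONE_ENCRYPT_V0:`, which is the marker I have seen rclone write, and that the config lives at the default path mentioned above. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: succeed if an rclone config file looks encrypted.
# Assumption: encrypted configs contain a line starting with "RCLONE_ENCRYPT_V0:".
is_encrypted_conf() {
  grep -q -e '^RCLONE_ENCRYPT_V0:' -- "$1"
}

# Usage:
#   is_encrypted_conf ~/.config/rclone/rclone.conf && echo "config is encrypted"
```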

The problem now is that rclone asks for the password every time it reads the config. We could keep the password in plain text and feed it to rclone on standard input, but that would defeat the purpose of encrypting the config in the first place. Fortunately, gpg and pass (the standard Unix password manager) come to the rescue.

$ sudo apt-get install pass gpg
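A quick preflight check, which the cron job will also depend on, is to make sure every tool is actually on the PATH. The helper name check_deps is hypothetical; it only relies on the shell builtin `command -v`.

```shell
#!/bin/bash
# Sketch: report any named command that is not on PATH.
# Returns 0 only if every command is present.
check_deps() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "not installed: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_deps rclone pass gpg
```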

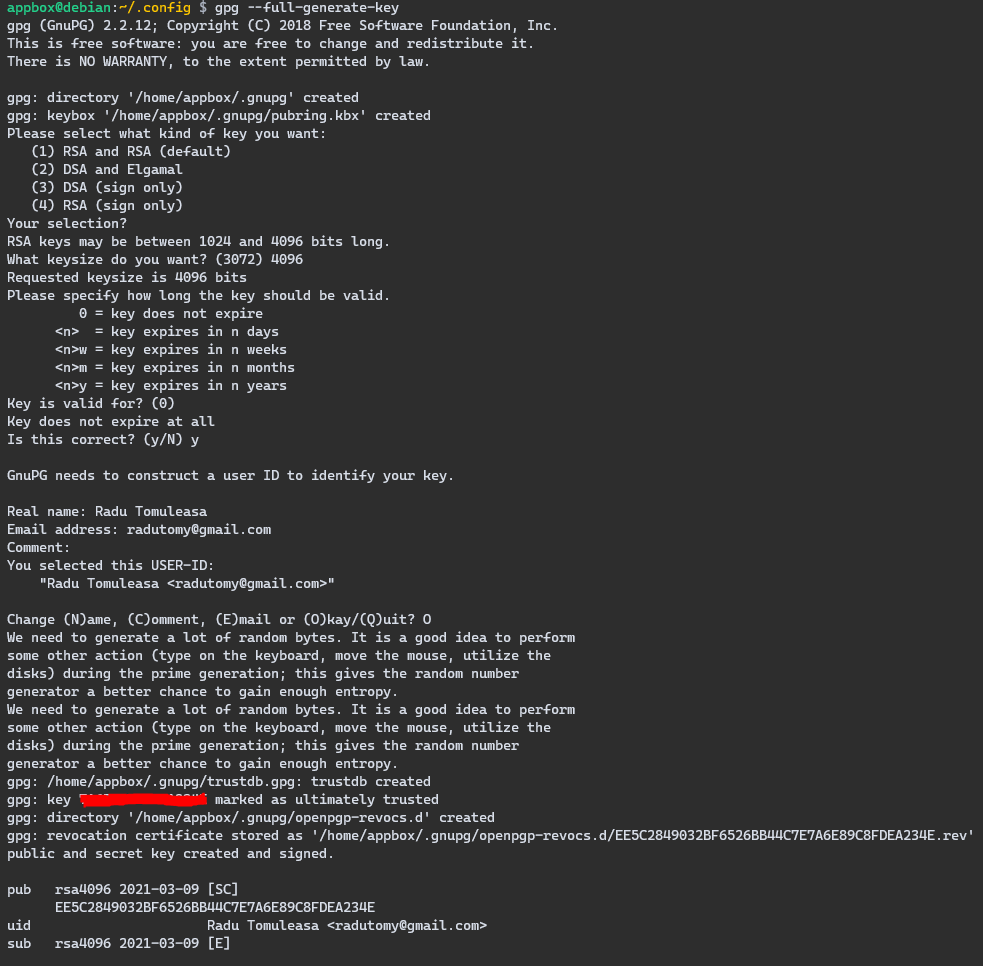

$ gpg --full-generate-key

Follow the prompts and accept the defaults. Take note of the key ID highlighted in red, as it is needed for the next step.
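Instead of copying the key ID off the screen, it can be read from gpg’s machine-readable listing. In `gpg --list-secret-keys --with-colons` output, `fpr` records carry the fingerprint in field 10, and the first one belongs to the first primary key. The function name is hypothetical, and the usage line assumes this is the only secret key on the machine; with several keys it simply picks the first.

```shell
#!/bin/bash
# Sketch: print the fingerprint of the first secret key from
# `gpg --list-secret-keys --with-colons` output read on stdin.
# "fpr" records hold the fingerprint in colon-separated field 10.
first_key_fpr() {
  awk -F: '$1 == "fpr" { print $10; exit }'
}

# Usage (assumes a single secret key):
#   pass init "$(gpg --list-secret-keys --with-colons | first_key_fpr)"
```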

Once gpg is set up, we need to securely store the rclone config password in the password store, replacing my_key with the key ID noted above:

$ pass init "my_key"

$ pass insert rclone/config

It will now ask for the password we set up earlier to encrypt the rclone config file. Type that in. pass has now stored the rclone config password, encrypted with your gpg key.
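It is worth confirming that the password command works non-interactively, since that is how cron will run it. A likely failure mode is that cron has no terminal for gpg to prompt on, so if gpg-agent has not cached the passphrase, `pass` cannot decrypt the entry and rclone receives no password. The helper below is a hypothetical sketch that runs a command the same way and fails if it prints nothing; it deliberately never echoes the password.

```shell
#!/bin/bash
# Sketch: run a password command and fail if it errors or prints nothing.
# The captured output is only tested for non-emptiness, never printed.
check_password_command() {
  local out
  out="$(bash -c "$1" 2>/dev/null)" || return 1
  [ -n "$out" ]
}

# Usage:
#   check_password_command "pass rclone/config" && echo "password available"
```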

Also set the gpg-agent passphrase cache timeout to something reasonable. I set mine to one year (the default is 10 minutes):

$ vim ~/.gnupg/gpg-agent.conf

default-cache-ttl 31536000

max-cache-ttl 31536000
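Editing the file by hand works fine; for a scripted setup, the sketch below writes both settings idempotently, replacing any existing TTL lines and keeping everything else. The function name is hypothetical. gpg-agent reads its configuration on start-up or reload, so the usage line reloads it with `gpgconf --reload gpg-agent`.

```shell
#!/bin/bash
# Sketch: set default-cache-ttl and max-cache-ttl in a gpg-agent.conf,
# replacing old TTL lines so the function can be re-run safely.
set_agent_ttl() {
  local conf="$1" ttl="$2"
  mkdir -p "$(dirname "$conf")"
  touch "$conf"
  # Keep every line except existing TTL settings (grep exits 1 if none remain).
  grep -v -E '^(default|max)-cache-ttl[[:space:]]' "$conf" > "$conf.tmp" || true
  printf 'default-cache-ttl %s\nmax-cache-ttl %s\n' "$ttl" "$ttl" >> "$conf.tmp"
  mv "$conf.tmp" "$conf"
}

# One year in seconds: 365 * 24 * 60 * 60
ONE_YEAR=$((365 * 24 * 60 * 60))

# Usage:
#   set_agent_ttl ~/.gnupg/gpg-agent.conf "$ONE_YEAR" && gpgconf --reload gpg-agent
```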

Running rclone

The command to sync the two remotes is as simple as this (here they are named gdrive and onedrive; substitute whatever names you chose during rclone config):

$ rclone sync gdrive:/ onedrive:/ --password-command="pass rclone/config"
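Because `rclone sync` makes the destination identical to the source, files that exist only on the destination get deleted. Running it once with `--dry-run` first shows what would change without touching anything. The wrapper below is a hypothetical sketch; the `RCLONE` variable is only there so the binary can be swapped out (for example with `echo`) when testing.

```shell
#!/bin/bash
# Sketch: preview a sync without modifying the destination.
# Defaults to the remote names used in this post; override via arguments.
preview_sync() {
  local src="${1:-gdrive:/}" dst="${2:-onedrive:/}"
  "${RCLONE:-rclone}" sync "$src" "$dst" --dry-run --password-command="pass rclone/config"
}

# Usage: preview_sync            # or: preview_sync gdrive:/ dropbox:/
```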

However, we want to automate this with cron so that it runs every 30 minutes. I’ve written the following script and made it executable:

~/.local/bin/rclone-cron.sh

#!/bin/bash

if pidof -o %PPID -x "rclone-cron.sh" >/dev/null; then  # another instance is still running

exit 1

fi

rclone sync gdrive:/ onedrive:/ --password-command="pass rclone/config"

exit
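The `pidof` check works by matching process names. A common alternative, swapped in here rather than taken from the original setup, is `flock` from util-linux, which ties the guard to a lock file and releases the lock automatically when the process exits. The function name and the lock file path in the usage comment are arbitrary, hypothetical choices.

```shell
#!/bin/bash
# Sketch: run a command only if no other process holds the given lock file.
# Uses flock(1) in non-blocking mode (-n) on file descriptor 9.
run_locked() {
  local lockfile="$1"; shift
  (
    flock -n 9 || exit 1   # another instance holds the lock: give up
    "$@"
  ) 9>"$lockfile"
}

# Usage:
#   run_locked /tmp/rclone-cron.lock \
#     rclone sync gdrive:/ onedrive:/ --password-command="pass rclone/config"
```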

Automating rclone with a cron job

All that’s left to do now is to put the rclone script in a cron job that runs every 30 minutes.

$ crontab -e

*/30 * * * * /home/appbox/.local/bin/rclone-cron.sh >/dev/null 2>&1
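`crontab -e` opens an editor; if you would rather script this step, the sketch below appends the line only when it is not already present, so re-running it does not create duplicate jobs. The function name is hypothetical; `crontab -l` and `crontab -` are the standard ways to read and replace the current user’s crontab.

```shell
#!/bin/bash
# Sketch: echo crontab text from stdin, appending "$1" unless an
# identical line is already present.
add_cron_line() {
  local line="$1" current
  current="$(cat)"
  if [ -n "$current" ]; then
    printf '%s\n' "$current"
  fi
  if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
    printf '%s\n' "$line"
  fi
}

# Usage:
#   line='*/30 * * * * /home/appbox/.local/bin/rclone-cron.sh >/dev/null 2>&1'
#   (crontab -l 2>/dev/null || true) | add_cron_line "$line" | crontab -
```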

Conclusions

With the help of rclone we’ve managed to leverage a secondary